
I’m working on my Python programming. I’ve used Python for my job a few times, but wanted to get some more practice with it. There are a few things I’ve been doing to try to improve.

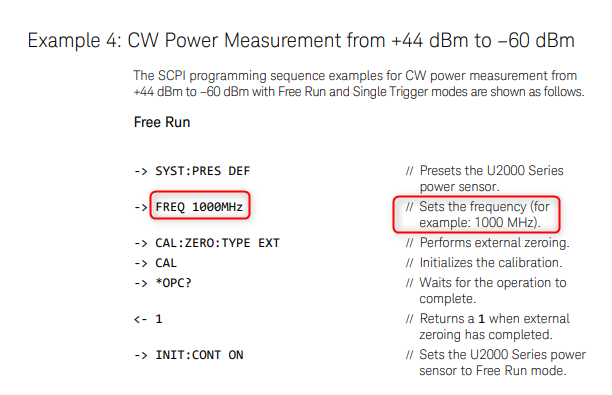

Right now I’m using VS Code on Linux, which seems to be working great. There’s not exactly a REPL available, but I’m still liking it.

This is a list of best practices I try to remember when I do my work. I don’t always succeed in doing everything on the list, but this is what I strive to do. I realize some of these things might be part of a process you have to follow as a test engineer, and if so, that’s great. However, there are lots of times when your own best practices are all you have to go on. In those times, having some discipline can save a lot of pain down the road.

1. Don’t trust a measurement

I’ve been tricked by measurements plenty of times. When writing a new test with an unfamiliar instrument, there are a lot of things that can go wrong. Before you send a SCPI :meas and :fetch and call it good, there are many factors to consider.

First, I try to understand how the instrument I am using works. It pays off to carefully read the manual. I don’t mean sit down and read the whole manual; I start with an example that is close to what I want to do and try to understand how it works. I might try removing some of the commands to see what happens. I often find I need to look up the individual commands and read their descriptions.

Here’s an example. I’ve written before about how I used a Keysight U2001A power sensor on a project a while ago, and I remember that when I first looked at the examples they all had a frequency command in them. It just says FREQ 1000MHz, and the comment doesn’t add much explanation. At first you might think that you are setting the instrument to measure at the frequency of interest. But remember, this is a power sensor: the measurement is inherently broadband and there is no frequency selectivity. If you dig into that command, you’ll learn that it looks up previously stored, frequency-specific measurement offsets from memory on board the instrument.

Finally, when you think you have your measurement correct, find a second way to verify it, such as with a different instrument.
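The cross-check in that last step can be as simple as comparing the two readings against a tolerance you are willing to defend. This is only a minimal sketch: the function name, the 0.5 dB tolerance and the example readings are all made up for illustration, and a sensible tolerance depends on the combined uncertainty of both instruments.

```python
def readings_agree(primary_dbm: float, reference_dbm: float,
                   tolerance_db: float = 0.5) -> bool:
    """Return True when two independent power readings differ by at most tolerance_db."""
    return abs(primary_dbm - reference_dbm) <= tolerance_db


# Hypothetical readings: the power sensor versus a second instrument on the same signal.
print(readings_agree(-10.12, -10.30))  # small difference, readings agree
print(readings_agree(-10.12, -13.00))  # large difference, worth investigating
```

If the two readings disagree, that is the cue to go back to the manual and the setup before trusting either number.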

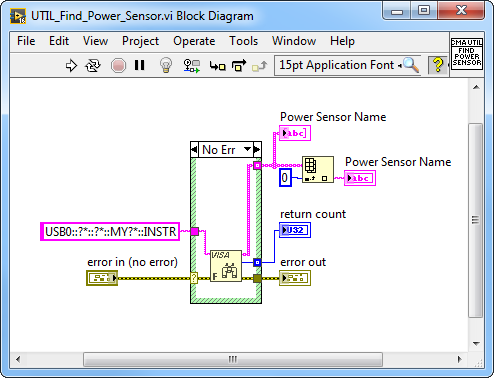

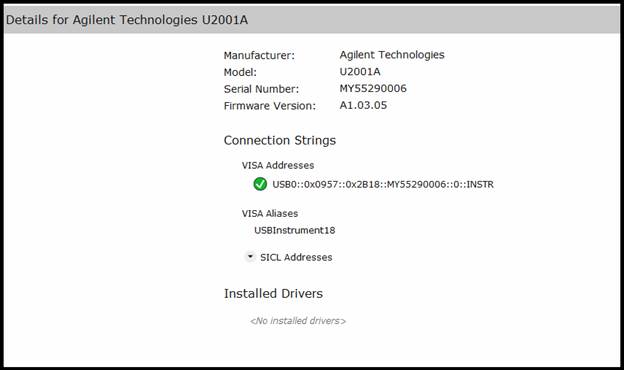

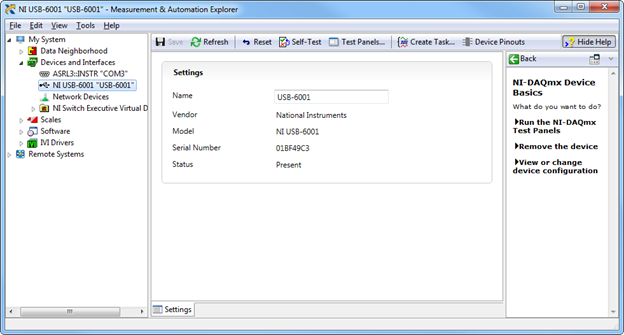

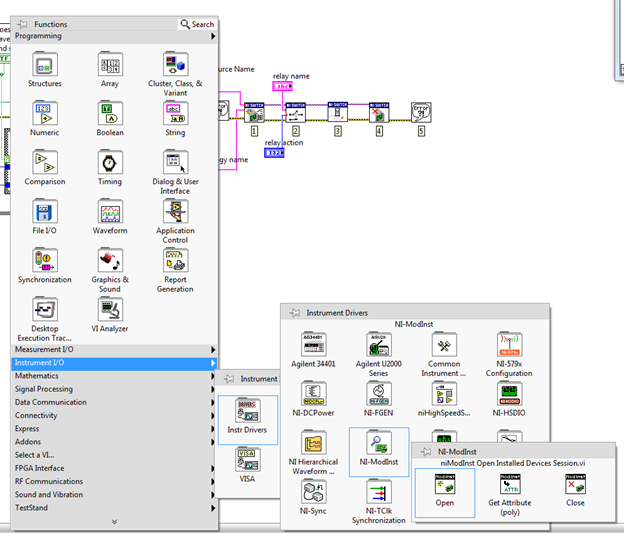

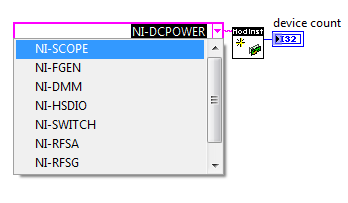

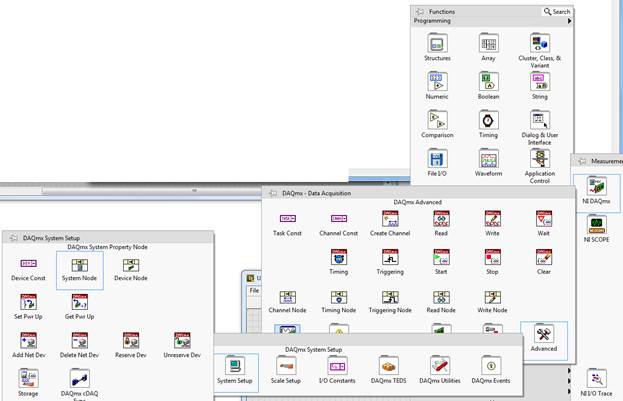

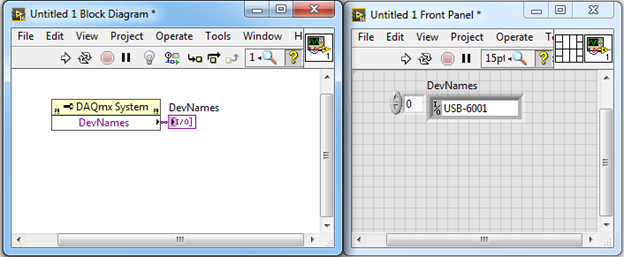

2. Write everything down

This sounds like a pain and it’s easy to skip, but I find it’s more efficient in the long run to keep a log of my work and debugging. I like to use OneNote, but I suppose anything would work. Start with a blank page or a page dedicated to the task. Then, as you work through a problem, take pictures of any important physical setups and write down settings or commands. Also record the measurements and results that you get. This seems to keep me from getting stuck in the short term, and it’s often useful for showing others what I have tried or just remembering what I did. Another benefit is that you can often reuse the pictures and step-by-step descriptions to write really good documentation.

3. Get out and talk to people

Not to put a label on engineers here, but it may not be in every engineer’s nature to go out and talk to people. In the course of a project, I try to get out of my cube and talk to other test engineers, and to manufacturing, quality and design engineers. People generally like to help and they can often save you a ton of time. Whether it’s a technical problem or a project management problem, the more you can get involved the better. It also helps to learn who knows what. You don’t have to know everything yourself, but you need to know who to ask. Lots of communication!

4. Study the R&R

I’m not crazy about using formal Gage R&R to evaluate test systems and electrical measurements, but it’s still worth doing to get an idea of how repeatable and reproducible your measurements are. I usually try to do a simple study where the test systems function as the operators, and test maybe 5 or 10 DUTs across three test systems. If you happen to have access to Minitab, it has a good tool called Gage Run Chart. It gives you a good visual evaluation of repeatability and reproducibility without all the percent-of-variation statistics that come with the full R&R. The statistics produced by the full R&R can often just be misleading. Of course, in some industries there is a strict validation process for tests and test systems that will dictate exactly what to do.

5. Show my system to the users

Put another way, work with the customer. I want to show my software and fixture operator interfaces to the people who are going to be using them as early as I can. It might not be fun, but it’s better to get the feedback early, while things are still easy to change, because the users are going to make me change it one way or another. Lots of communication!

6. Try to break it

Everyone has heard this when developing systems and software. Try to validate the system and perform all the tasks an operator would. Make sure the test system can catch the defects it is intended to catch. Try to think of ways the mechanical fixtures could be confusing or cause damage to the DUT. There is the Lean concept of Poka-Yoke, that is, making things mistake-proof, and I want to always keep that in mind. Think about how a test system could be abused or misused just to get the test to pass.

7. Be careful of stuff you don’t control

Oh man, I’ve been scorched by this before... Be careful if you have to get support from other parts of your company to do your testing, especially if this support isn’t really what they would consider to be their job (even though it totally is). For example, if you need the firmware department to build you a special firmware to perform your testing, this can be dangerous because it really only helps you and gives them extra work. I don’t mean this to sound like “you can’t trust anyone” or “everyone is against me,” but it comes down to a lot of communication and early planning whenever support from other groups is required for success. Lots of communication!

8. Be patient and try to do it right the first time

There is often project pressure, and it can feel like people forget that products need to be tested and manufactured. Try to resist the urge to “just get something out there” and fix it later. It may seem like there is a lot of pressure to get done, but the pressure will get worse when the test isn’t working in manufacturing. Often the problem is just communicating to the team that the test isn’t ready, and people will be willing to work with the situation. This one can be really hard, but a test group that slaps everything together will end up in a huge hole after a few years of it.

9. Use high quality equipment

I have this joke that every time I tell my manager how much something will cost they say “merciful heavens!” and then faint. Seriously though, I do think it always pays off to buy high quality equipment from real instrumentation companies. In general, avoid using USB instruments and devices in your test system. This is just my opinion, but I’ve had issues with problematic USB communications. At the very least, use USB instruments from reputable vendors like Keysight and NI. Try to use a PXI chassis or, even though it’s old, GPIB. Use an industrial computer with a consistent operating system. I’ve seen lots of cases where test systems that are supposed to be the same are running on random PCs with different versions of Windows, 32-bit, 64-bit, etc. I sometimes feel like I’m the only one who cares about this, but it’s important to get your computers from a real industrial computer supplier whose business it is to ensure a consistent setup.

10. Get stuff done

I don’t know if this will make sense, but sometimes it’s tempting to just wait for things to sort themselves out. Resist this temptation and really try to get things moving and done. When I feel stuck, the solution again often seems to be lots of communication!

I wanted to add something related to my last post.
Since I wrote those posts on TestStand, I have been through a lot of pain with TestStand and have gained a lot of new experience. The source of the pain has been trying to deploy some TestStand systems into our manufacturing environment. I ran into a problem with the specific technique of providing an alias to an instrument and using it in a deployed system.

The situation was that I was building TestStand systems in the office and testing the installation on my computers there. I then had to deploy these systems in our manufacturing plant onto computers that were new and newly baselined. As you may know, it can be difficult to test a deployment on a system that has previously had software installed on it. If you uninstall, things get left behind, and it’s not always possible to easily create a restore point. I’m sure there are solutions to this problem, but I have not dealt with that yet.

My test sequence required me to assign an alias to a Keysight instrument in NI-MAX, but MAX was not recognizing the instrument after the deployment. I had installed the Keysight Connection Expert software and VISA drivers, but I suspect some NI instrument driver didn’t get included in my installation. The main problem is that if MAX does not recognize the instrument, there is no way I can assign an alias to it. That means my deployment doesn’t work, and I either have to install TestStand to modify the sequence file or rebuild the deployment with the new changes.

Well, I didn’t like doing this, and I found a simple way to avoid having to assign an alias to every instrument I use. LabVIEW includes a VI called “Find VISA Resource” (see figure). I wrote a little wrapper VI around this VI to search for the correct instrument in a way that is generic but still tied to the specific instrument instance (the serial number).

If I open up the Keysight (Agilent) Connection Expert software, I can see the format of the VISA address, and this matches the format string I’m passing to the Find VISA Resource VI. The string has basically the same format for all USB instruments, but the key here is that this instrument’s serial number always starts with “MY.” Including “MY” followed by the question mark and wildcard search characters should keep this code from finding the wrong instrument. Plus, I don’t expect any other Agilent instruments to be connected to this system.

I feel this is a good fix for having to provide an alias in NI MAX. However, I have noticed that this will not work with NI hardware like a DAQmx USB instrument. From what I have read, DAQmx does not use a resource string scheme like other instruments. One page and example I found show how to use the NI-ModInst tool. I have not used it for real, but from a quick look it appears to find only PXI instruments. It is not seeing my USB DAQmx instrument.

Digging a little more, a DAQmx device name can be found with the DAQmx System Property Node, which returns the device name alias. What you can do with this is give the VI an output containing the name and send it into TestStand as a variable. All set.
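To make the search-string idea concrete outside of LabVIEW, here is a minimal Python sketch. It is not the wrapper VI from this post and it does not talk to VISA at all. It assumes a simplified subset of the VISA search syntax in which `?` stands for any single character and `*` repeats the preceding character, so `?*` means "anything," and it turns that into a regular expression. The resource strings, vendor and product IDs, and serial numbers below are invented for illustration.

```python
import re


def visa_pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a simplified VISA-style search pattern into a compiled regex.

    Only '?' (any one character) and '*' (repeat the previous item) are handled;
    everything else is matched literally. A pattern must not start with '*'.
    """
    parts = []
    for ch in pattern:
        if ch == "?":
            parts.append(".")
        elif ch == "*":
            parts.append("*")
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts))


def find_resources(pattern: str, resources: list) -> list:
    """Return the resource strings that match the whole pattern."""
    regex = visa_pattern_to_regex(pattern)
    return [name for name in resources if regex.fullmatch(name)]


# Hypothetical resource list, as a resource manager might report it.
connected = [
    "USB0::0x1111::0x2222::MY00000001::0::INSTR",
    "USB0::0x1111::0x3333::US00000002::0::INSTR",
    "GPIB0::12::INSTR",
]

# Any USB instrument whose serial number starts with "MY".
print(find_resources("USB?*::MY?*::?*INSTR", connected))
```

The sketch shows why the "MY" prefix matters: without it, the pattern would also pick up the second USB device in the list.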

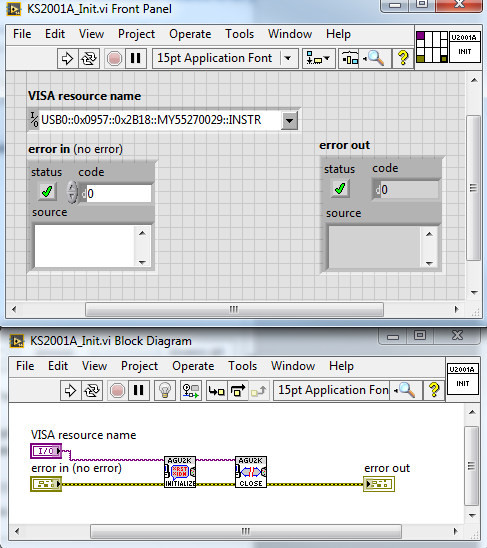

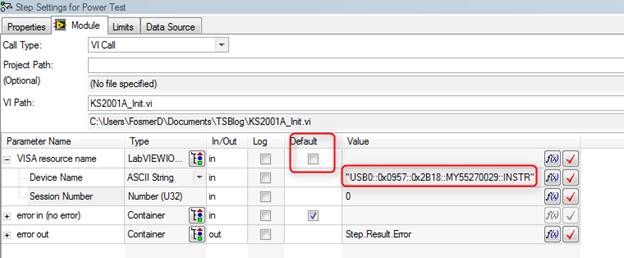

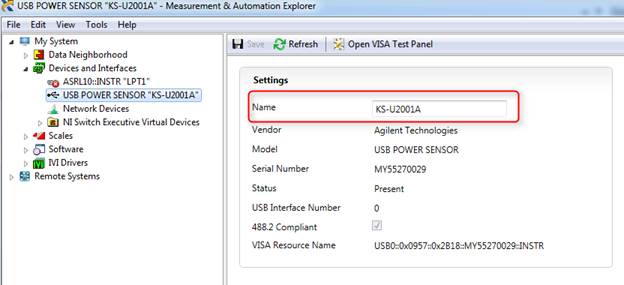

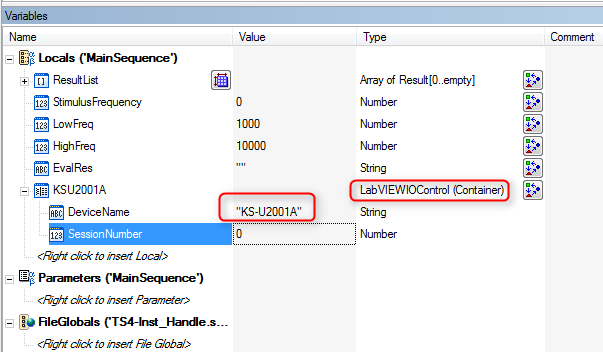

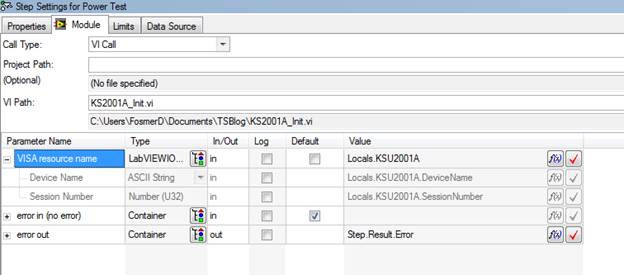

Today I’m going to show a good way to add an instrument handle to a TestStand sequence. I’m using a VISA USB instrument, the Keysight U2001A power sensor. I’ve written about this instrument before.

First I’m going to create a little VI that calls the initialization driver of the U2001A power sensor. You can see in Figure 1 that there is a VISA resource name that is specific to this instrument. The issue is that when this VI is called in TestStand, the string has to be saved in the sequence file, so the sequence can only ever use that specific instance of the power sensor unless we modify the sequence file. In TestStand it would look as shown in Figure 2. Either the Device Name is set as a hard-coded string, or it is set to default from the VI, which contains a hard-coded string.

A better way to do it is to set an alias for the power sensor in NI-MAX and then put that alias into your VI and sequence. Figure 3 shows setting an alias in NI-MAX in the Name field.

Now in the sequence file, there is a special variable type just for this purpose called LabVIEWIOControl, and its DeviceName property is set to the alias KS-U2001A. The SessionNumber property should stay at zero. This is shown in Figure 4.

Looking at the module adaptor shown in Figure 5, the local variable is set to the new KSU2001A variable. This could also be a good variable to make global, so that you can access the instrument in any sequence or sub-sequence.

Summary

In this post I went over how to use NI MAX to create a VISA resource name alias to be used by a sequence file to access an instrument. The advantage of this is that you do not have to change the specific VISA name stored in your sequence file to match the specific instance of the instrument connected to the computer running the test sequence. However, you still have to create the same alias in NI MAX for that instrument on any computer that will run the sequence file.

Source Code

Find the source code for this post here
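To illustrate the alias idea from this post outside of TestStand, here is a small Python sketch. It is an analogy only: the dictionary plays the role that NI MAX plays on each computer, and the station names, resource strings and function name are all hypothetical. The point is that the test code only ever mentions the alias, while each station supplies its own mapping.

```python
# Hypothetical per-station alias tables, standing in for the NI MAX setup on each PC.
STATION_ALIASES = {
    "office-pc": {"KS-U2001A": "USB0::0x1111::0x2222::MY00000001::0::INSTR"},
    "plant-pc": {"KS-U2001A": "USB0::0x1111::0x2222::MY00000002::0::INSTR"},
}


def resolve_alias(station: str, alias: str) -> str:
    """Return the resource string for an alias on a given station.

    Raises LookupError when the alias has not been set up on that station,
    which mirrors the "you still have to create the alias everywhere" caveat.
    """
    try:
        return STATION_ALIASES[station][alias]
    except KeyError:
        raise LookupError(
            f"alias {alias!r} is not defined on station {station!r}"
        ) from None


# The same alias resolves to a different physical instrument on each station.
print(resolve_alias("office-pc", "KS-U2001A"))
print(resolve_alias("plant-pc", "KS-U2001A"))
```

The failure case is the important design point: a missing alias should fail loudly at setup time and not quietly fall back to some other instrument.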

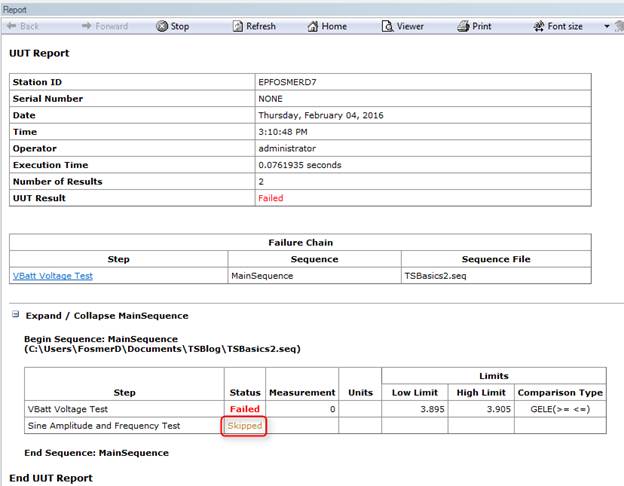

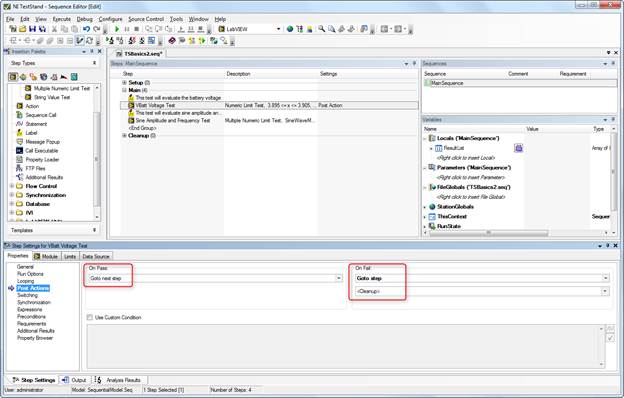

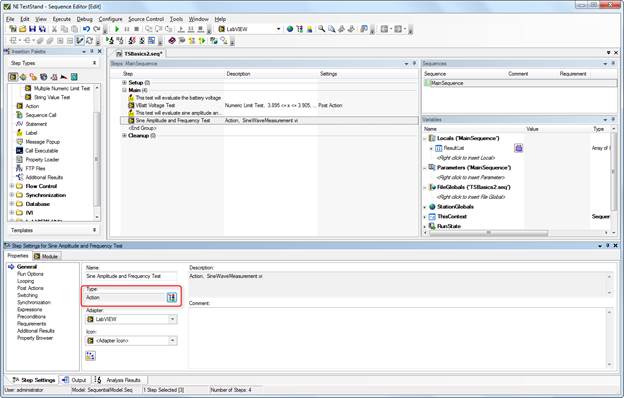

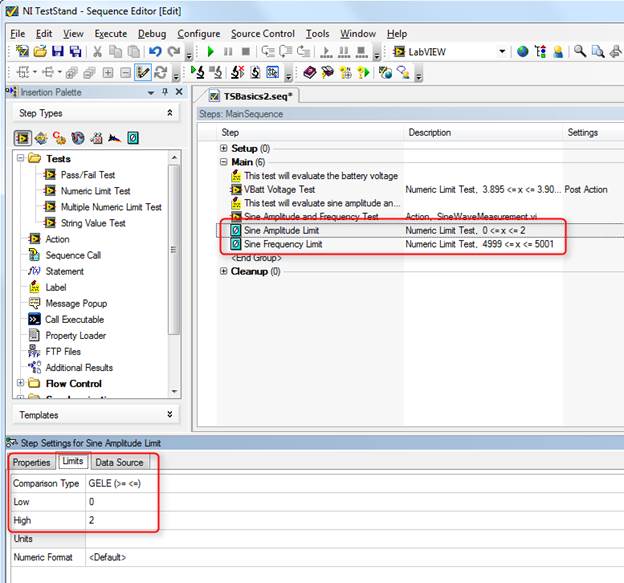

In part 2, I talked about preconditions, post actions and some other ways to add limits to tests. In this part I’ll continue looking at some of the other step types, specifically:

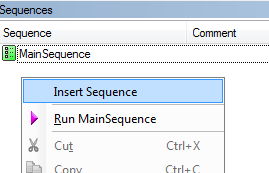

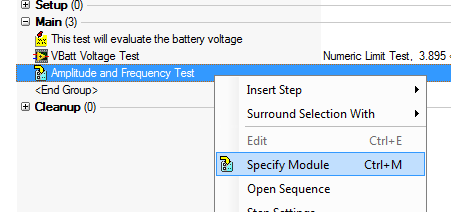

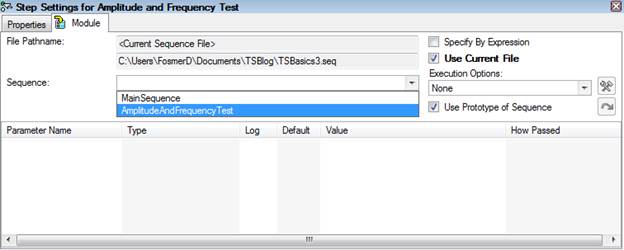

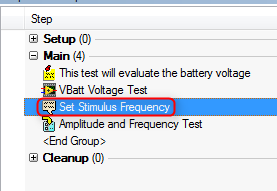

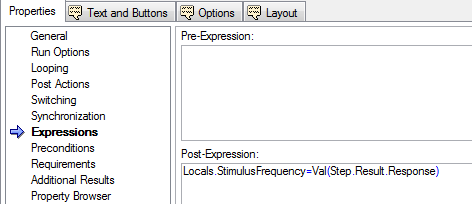

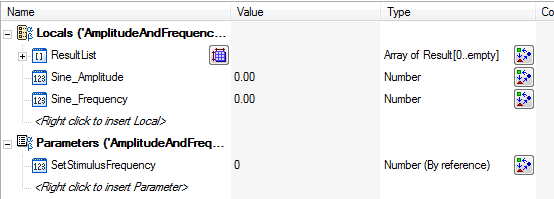

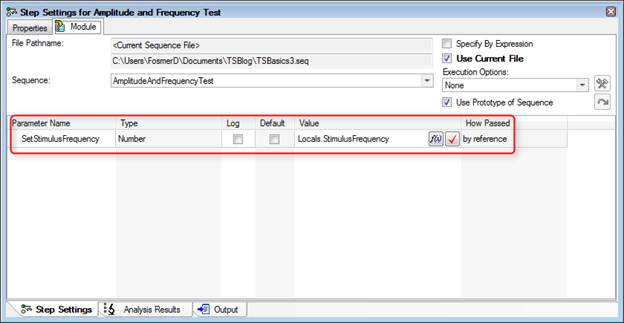

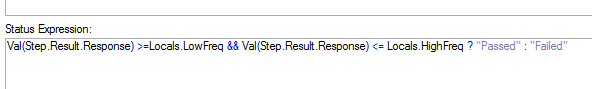

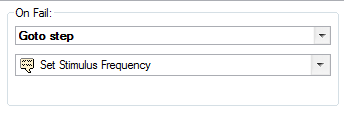

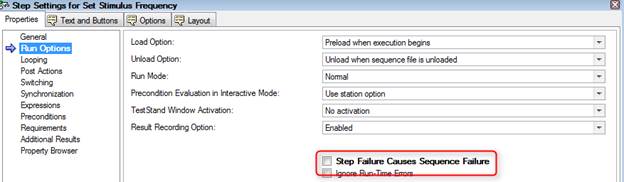

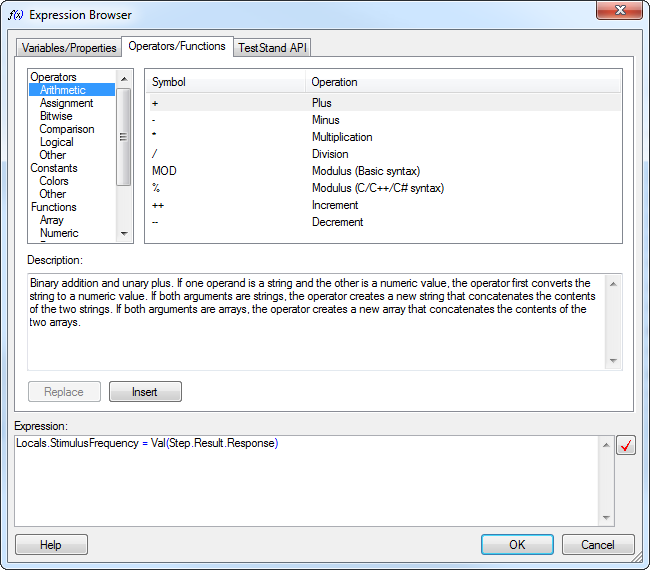

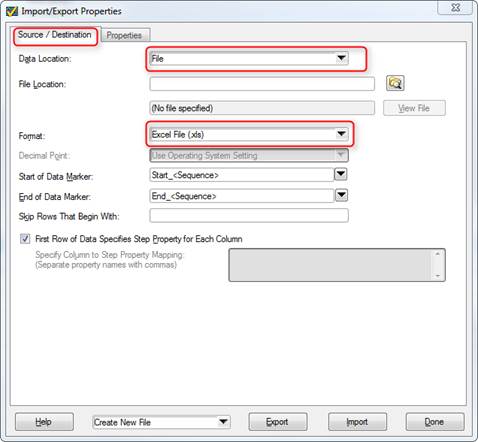

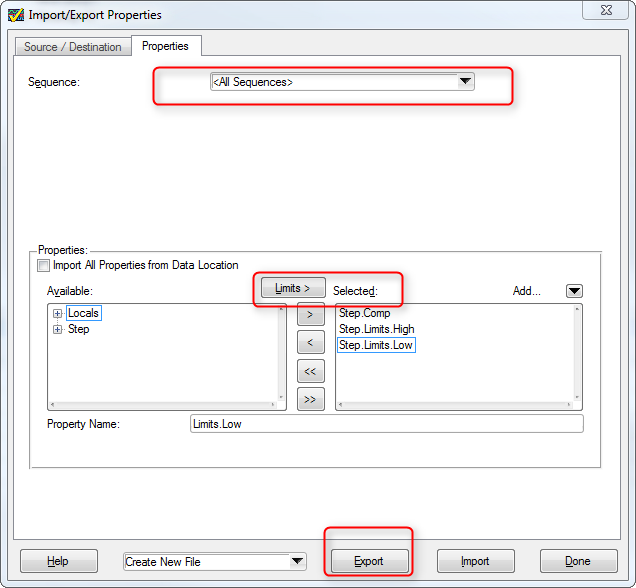

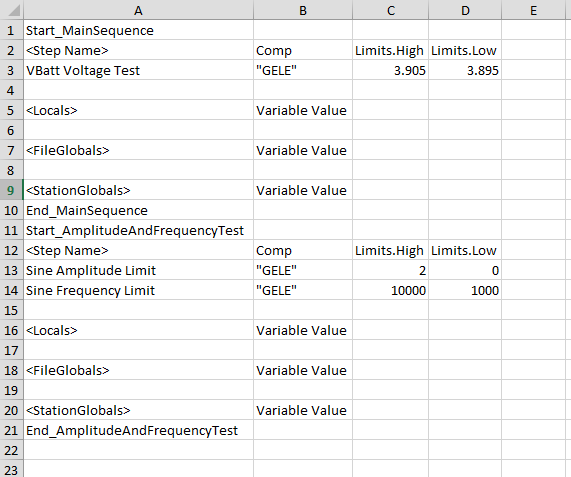

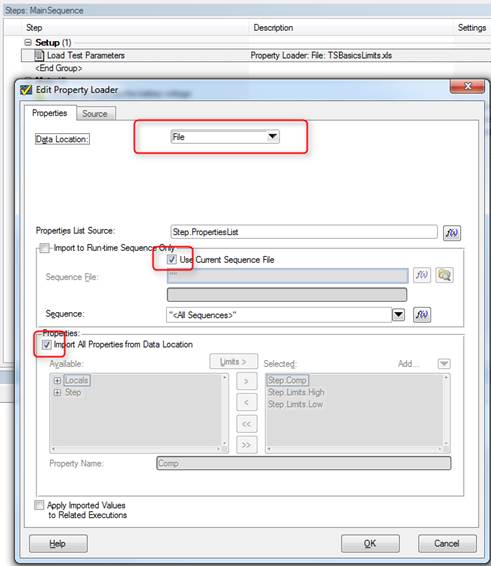

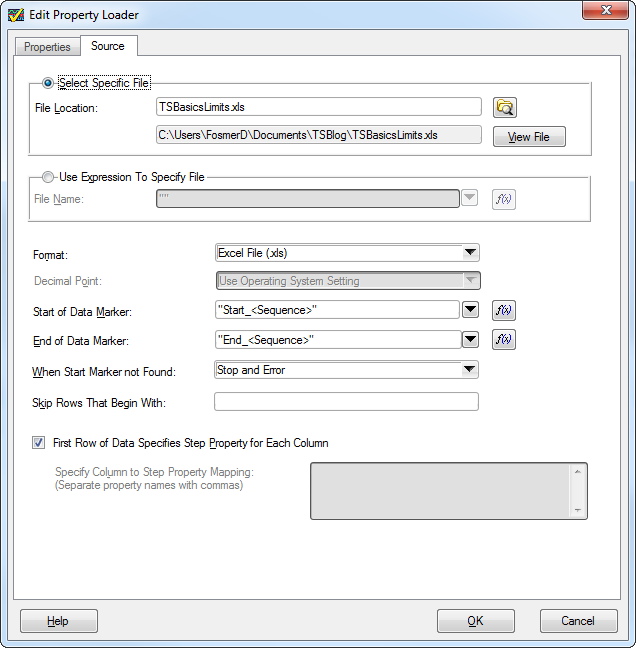

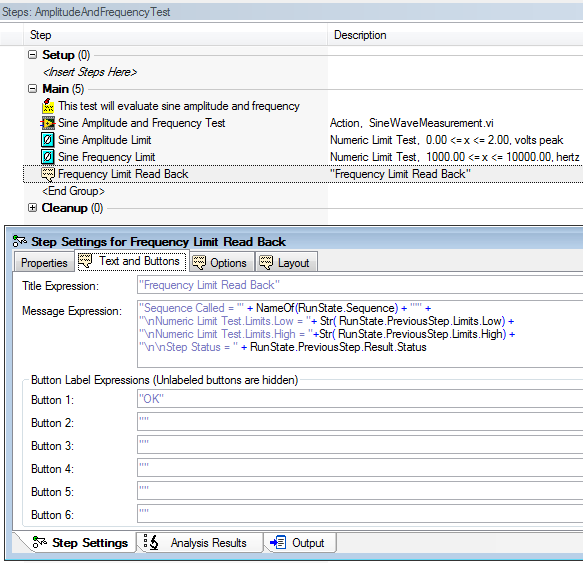

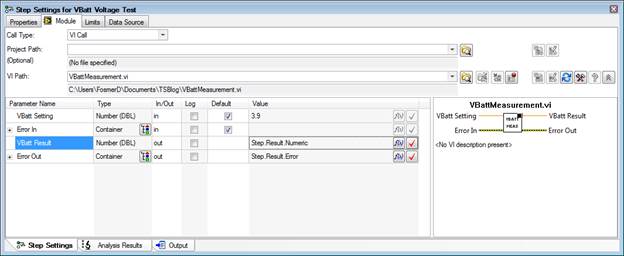

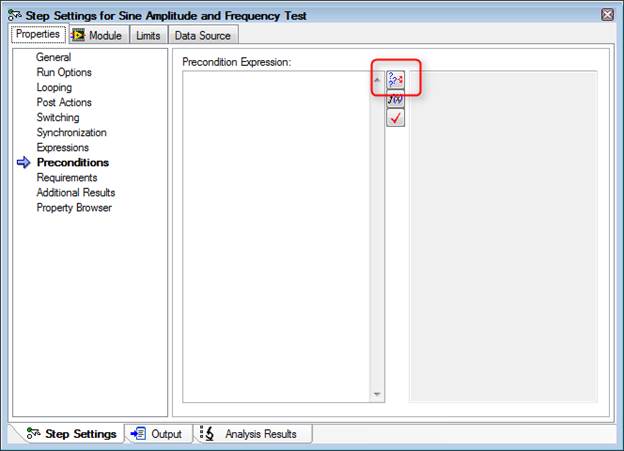

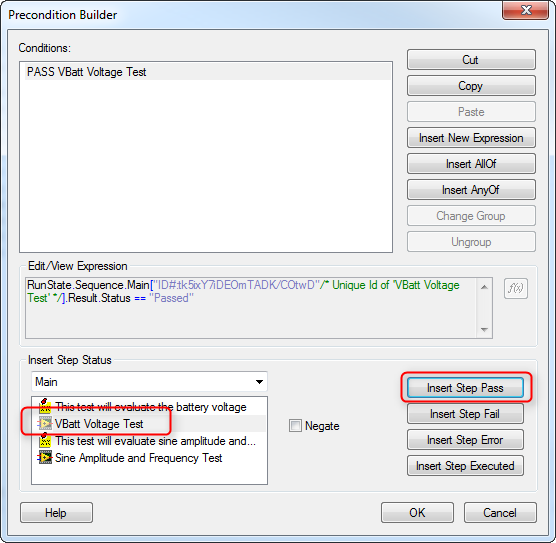

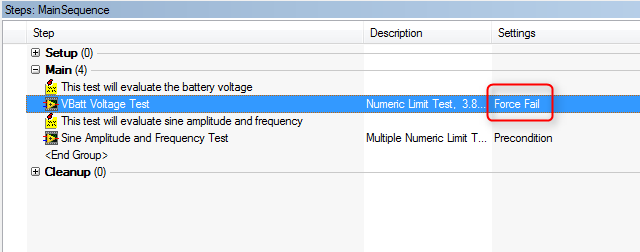

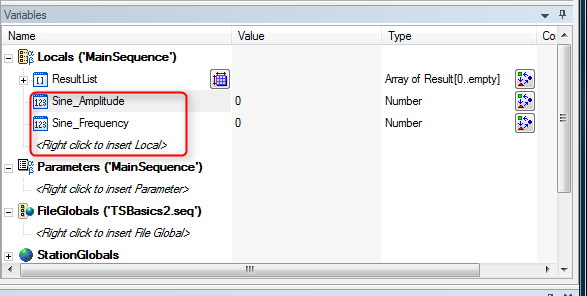

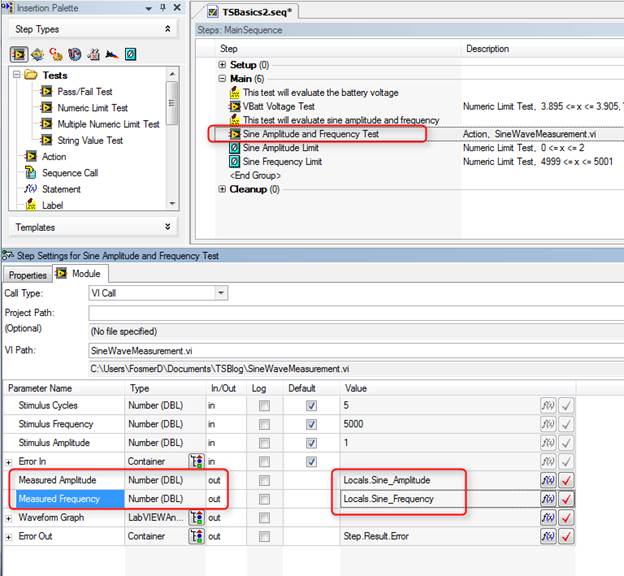

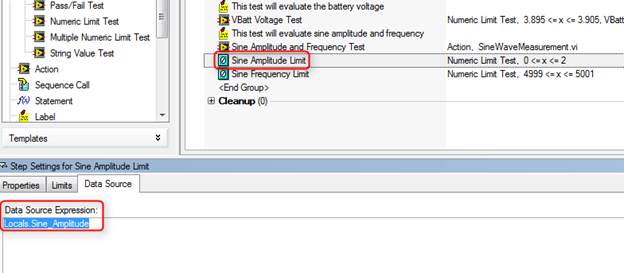

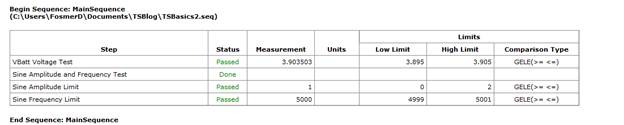

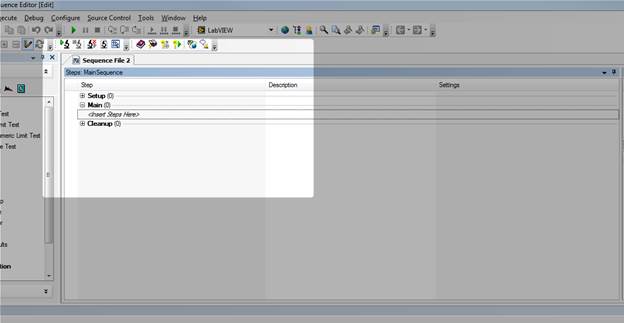

Creating a sub-sequence

If you have ever read anything National Instruments puts out on good code development practices, they always talk about coupling and cohesion, that is, making code modules that are loosely coupled and have high cohesion. Loose coupling means that code modules are for the most part independent of each other. High cohesion means that all the code in one module is there to serve the one purpose of that module.

One way to build cohesion into a TestStand sequence is to make the separate tests (or whatever logical division you are using) into their own sequences. You can either have a sequence file call other sequence files, or you can create a subsequence that is called from a main sequence. The difference between the two is that a subsequence is not a separate file in the directory; it is stored inside the main sequence file.

Starting with the TSBasics2.seq sequence file from part 2 and renaming it TSBasics3.seq, I’ll show how to turn the Sine Amplitude and Frequency Test into a subsequence. Figure 1 shows the sequences pane; right click and select insert sequence. Name the new sequence AmplitudeAndFrequencyTest. Cut and paste the steps from the MainSequence into the new AmplitudeAndFrequencyTest sequence.

At this point the new sequence is still not called, so we have to drag a sequence call step from the Insertion Palette into the MainSequence and set it to call the new AmplitudeAndFrequencyTest sequence. Rename the sequence call as shown in Figure 2, then right click and select Specify Module. Then click the Use Current File check box and select AmplitudeAndFrequencyTest from the drop-down list, as shown in Figure 3.

There is one more thing to fix. Since we used local variables in the main sequence to move the amplitude and frequency measurements into the limit steps, we have to put those local variables in the new AmplitudeAndFrequencyTest subsequence. These can just be cut and pasted from the main sequence.
Message popup and passing parameters

Now that we have a subsequence, we need to add parameters to it so that we can interact with it in a way that maintains good cohesion. First, let’s use the Message Popup step type to let the user set the stimulus frequency. This message popup will store the user’s input in a local variable called StimulusFrequency. Then we will add a parameter called SetStimulusFrequency to the AmplitudeAndFrequencyTest subsequence, which will be passed to the VI for testing, and we will see the result on the report.

Add the message popup step type (Figure 4). Create a local variable in the main sequence called StimulusFrequency. Rename the message popup to “Set Stimulus Frequency” and go to the Expressions tab of the step properties. Add the Post-Expression Locals.StimulusFrequency=Val(Step.Result.Response) as shown in Figure 5. This assigns the user response to the local variable, and the Val function converts the string to a number. Go to the Options tab of the message popup and click the Enable Response Text Box option. This is what allows the user to input a value.

The next task is to go into the AmplitudeAndFrequencyTest subsequence and create a parameter called SetStimulusFrequency, shown in Figure 6. This is how the subsequence is passed the stimulus frequency from the upper-level main sequence. To pass a value to this parameter, click on the Amplitude and Frequency Test sequence call step in the MainSequence. Select the SetStimulusFrequency parameter and use the Value field to set the value that will be passed to it. Here we are using the local variable StimulusFrequency. See Figure 7.

Another thing we should do when adding user input is validate that input. The user input is accepted as a string and we are converting it into a number, so we should make sure that it is a valid number. Right now the limit on frequency is 4999 to 5001; let’s open that up to 1000 to 10000.
I’ll make two locals holding these values, 1000 and 10000. Then I’ll add a status expression to make sure the entered value is within this range. Figure 8 shows the expression. If the input is not in this range, the step fails, and we’ll add a post action to repeat the step on failure, shown in Figure 9. We also have to go to the Run Options tab in Figure 10 and uncheck the Step Failure Causes Sequence Failure option, or any incorrect entry will cause the test to fail.

Now, if you enter a number outside the range 1000 to 10000, the dialog will just pop back up so you can reenter it. This isn’t totally fool-proof. For one thing, there is no dialog explaining that you entered an invalid value, and you could probably enter text into the dialog that would convert to a number in this range, so the sequence would pass with some random input. Another problem is that the validation values are not tied to the actual limits, so there could be a mismatch. So… room for improvement.

This last step also gave us a chance to write some expressions. Expressions are very powerful, but I’ve found they can be tricky and the help isn’t always easy to find. The expression browser shown in Figure 11 is probably the best bet, and a lot of experimenting and learning has to take place.

Using the Property Loader

The last thing I’m going to look at today is the Property Loader. The Property Loader is a step type that loads properties from a file or database. This is super useful because you can keep things like your test limits in a separate file and change them without having to change the sequence file itself. It’s pretty easy to set up; I’ll demonstrate by loading properties from Excel.

First drag a Property Loader step type into the Setup step group of the main sequence. This is the perfect type of operation to put into the Setup step group of the main sequence, as it fits well into the logical category of setup.
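Stepping back to the input validation for a moment, the range check and the repeat-on-failure behavior described above can be sketched in plain Python. This is not TestStand expression syntax, and the function names are made up. One assumption worth flagging: Python’s float() rejects any text that is not entirely a number, which is stricter than the loophole worried about above.

```python
def parse_frequency(text: str, low: float = 1000.0, high: float = 10000.0):
    """Return the entry as a float, or None if it is not a number within [low, high]."""
    try:
        value = float(text.strip())
    except ValueError:
        return None  # not a number at all
    if low <= value <= high:
        return value
    return None  # a number, but outside the allowed range


def prompt_until_valid(responses, low: float = 1000.0, high: float = 10000.0) -> float:
    """Walk through operator responses until one is valid, like repeat-on-fail."""
    for text in responses:
        value = parse_frequency(text, low, high)
        if value is not None:
            return value
    raise ValueError("no valid frequency was entered")


# Simulated operator entries: text, out of range, then a valid value.
print(prompt_until_valid(["abc", "20000", "2500"]))
```

Passing the low and high values in as parameters is one way to address the mismatch problem mentioned above, since the same two numbers could then be shared with the limit step.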
Since we already have all our limits set up in the sequence file and subsequence, it’s easy to create the limits Excel file. Click on Tools -> Import/Export Properties… On the Source / Destination tab select file and Excel, on the Properties tab select all sequences, and click the limits button. Click Export, give it a file name and click Done. See Figures 12 and 13.

Now let’s take a look at the file that was created, shown in Figure 14. You can see that the limits we previously set up are in there, along with empty fields for locals, file globals, and station globals.

Going back to the main sequence, click on the Property Loader step we added and click Edit Property Loader… in the step settings. Make the settings shown in Figure 15 for the Properties tab and in Figure 16 for the Source tab (navigate to the property file you created, as your file path will be different).

The next thing I added, which is optional, is a message popup in the AmplitudeAndFrequencyTest sequence to show the limits that were read from the limits file. Figure 17 shows the expression needed to create this popup message. This is just to illustrate that the limits are now being pulled from the file and are not what is saved in the sequence file.

We don’t have to do anything to the sequence file to get the limits applied to the test results; that’s all handled by the Property Loader. One thing we may want to do is simply zero out the limits in the sequence file so no one is tricked into thinking the limits are stored there. This may call for a Label comment as well. The bad thing about zeroing the limits is that when you read the sequence file, the descriptions would just list limits of zero. So, it’s up to you what you like.

Summary

That’s all I’m going to go over in this post. I worked through adding a sub-sequence with a parameter and a message popup, and wrote some statements to validate the input. I also showed the basics of using the Property Loader to load limits and other values from an external file.
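As a rough illustration of what the Property Loader buys you, here is a Python sketch that keeps limits in a separate table and applies them at run time. The column layout is invented for this example and is not the format TestStand exports, and the amplitude limits are made-up values; only the 1000 to 10000 frequency range comes from this post. The file is simulated with an in-memory string so the sketch runs on its own.

```python
import csv
import io

# Stand-in for an external limits file; layout and amplitude values are hypothetical.
LIMITS_CSV = """\
step,measurement,low,high
Sine Amplitude and Frequency,Amplitude,0.9,1.1
Sine Amplitude and Frequency,Frequency,1000,10000
"""


def load_limits(csv_file) -> dict:
    """Read limits into a dict keyed by (step name, measurement name)."""
    limits = {}
    for row in csv.DictReader(csv_file):
        key = (row["step"], row["measurement"])
        limits[key] = (float(row["low"]), float(row["high"]))
    return limits


def within_limits(value: float, low: float, high: float) -> bool:
    """Inclusive two-sided comparison: low <= value <= high."""
    return low <= value <= high


limits = load_limits(io.StringIO(LIMITS_CSV))
low, high = limits[("Sine Amplitude and Frequency", "Frequency")]
print(within_limits(5000.0, low, high))
```

Changing a limit then means editing the table and not the test code, which is the same separation the Property Loader gives the sequence file.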
Source Code

Find the code for this post here

In Part 1 on TestStand I programmed the very minimum to get a test system going. In this part, I’ll add some more steps and show a lot of other useful features. This will mostly involve test steps and flow control.

Using A Precondition

The first thing I did was add a new test VI, a battery voltage test. Note again that this isn’t a real measurement, but for this one I added a little randomization so the results are a somewhat more realistic simulation. Figure 1 shows the battery voltage test VI.

I have added this new test to my sequence file before the sinewave test. This time I used the Numeric Limit Test adaptor, as this VI has only one output that I want to apply a limit to. You can see from the VI that the simulated VBatt Result can range from 3.895 to 3.905, so that is what I set the limits to.

It’s slightly easier to set up the Numeric Limit Test than the Multiple Numeric Limit Test. First I go to the Limits tab of the Step Settings to enter the high and low limits, as shown in Figure 2. Next I go to the Module tab and set the VBatt Result Value expression to Step.Result.Numeric. This is shown in Figure 3. This again uses TestStand’s built-in results collection. That’s it; the Data Source tab is automatically set to Step.Result.Numeric as well.

The next thing I’m going to do is apply a precondition to the sine amplitude and frequency test. A precondition is an expression that is evaluated before a step executes. I’m going to create a precondition that says the Sine Amplitude and Frequency Test will only execute if the VBatt Voltage Test passes. Since this is such a common task, there is a very quick way to write this expression. Go to the Sine Amplitude and Frequency step and open the Properties tab of the step settings. Click on Preconditions and click the Precondition Builder icon shown in Figure 4.
In the Precondition Builder dialog box, click the VBatt Voltage Test step, then click the Insert Step Pass button, and that’s it. This is shown in Figure 5.

Save the sequence file and in the Execute pull-down menu click Single Pass. The sequence will run, and you can see from the report that both the VBatt Voltage and Sine Amplitude and Frequency Tests pass.

To test the precondition we can force the VBatt Voltage Test to fail. Right click on the VBatt Voltage Test step and click Run Mode -> Force to Fail. You can now see in the settings for that step, shown in Figure 6, that it is set to Force Fail. Run Single Pass again and we can see that the Sine Amplitude and Frequency Test is never run and is given a status of Skipped (Figure 7).

Using a Post Action instead of a Precondition

There are lots of ways to do things in TestStand. For example, I’ve worked with some engineers who don’t like to use preconditions because they feel it hides test flow inside the test step where you can’t see it. A way to avoid preconditions that also saves quite a bit of work is to just program the conditional step to go to cleanup on fail. Say there were 4 other tests along with the Sine Amplitude and Frequency test and we don’t want any of them to execute if the VBatt Voltage Test fails. We can just tell the sequence to go to the cleanup step group on failure of the VBatt Voltage Test, and we can skip all the preconditions. Under the Post Actions property, you can see in Figure 8 that we’ve left the on-pass behavior at the default of going to the next step, but changed the on-fail behavior to go to cleanup.

Making limits their own step

Another method to set test limits is to make them their own test step. This can be nice because if you have multiple limits in one sequence step, they are hidden away and not easily viewed without opening the step. Putting each limit in its own step makes it a little easier to read the sequence. This will also demonstrate the concept of using a variable, which we have not talked about yet.
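Before moving on, the two flow-control options covered above (a precondition on the dependent step versus a go-to-cleanup post action on the gating step) can be compared in a toy Python sequencer. This is a sketch of the idea only and not how TestStand is implemented; the class, field and function names are hypothetical, and the statuses are simplified to Passed, Failed and Skipped.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    name: str
    run: Callable[[], bool]                 # returns True on pass
    requires_pass_of: Optional[str] = None  # precondition: this step must have passed
    goto_cleanup_on_fail: bool = False      # post action on failure


def run_sequence(steps: List[Step]) -> Dict[str, str]:
    """Run the steps in order and return a status for each step that was reached."""
    status: Dict[str, str] = {}
    for step in steps:
        # Precondition: skip this step unless the named step passed.
        if step.requires_pass_of is not None and status.get(step.requires_pass_of) != "Passed":
            status[step.name] = "Skipped"
            continue
        passed = step.run()
        status[step.name] = "Passed" if passed else "Failed"
        # Post action: on failure, leave the main steps and go to cleanup.
        if not passed and step.goto_cleanup_on_fail:
            break
    return status


def vbatt_forced_fail() -> bool:
    return False  # simulates Run Mode -> Force to Fail


def sine_test() -> bool:
    return True


with_precondition = [
    Step("VBatt Voltage Test", vbatt_forced_fail),
    Step("Sine Amplitude and Frequency Test", sine_test,
         requires_pass_of="VBatt Voltage Test"),
]
with_post_action = [
    Step("VBatt Voltage Test", vbatt_forced_fail, goto_cleanup_on_fail=True),
    Step("Sine Amplitude and Frequency Test", sine_test),
]

print(run_sequence(with_precondition))
print(run_sequence(with_post_action))
```

In the sketch, the precondition version records the dependent test as Skipped, while the post action version never reaches it at all, and one flag on the gating step covers every test after it.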
Here is the process to move the Sine Amplitude and Frequency Test limits to their own sequence steps:

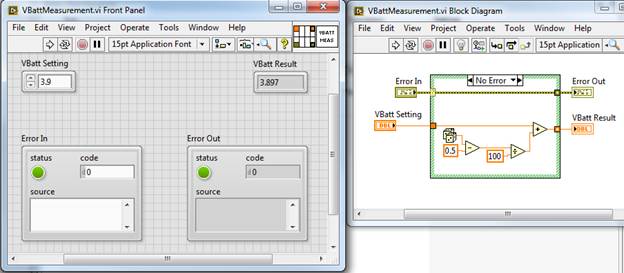

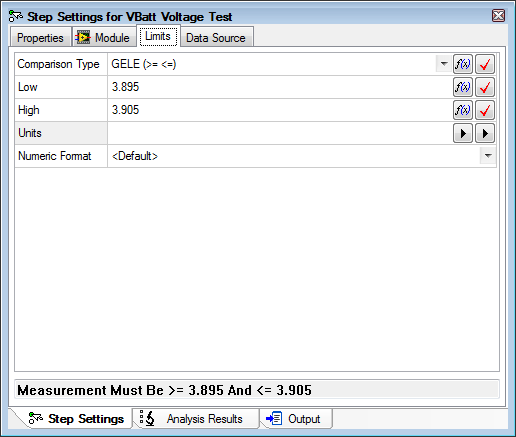

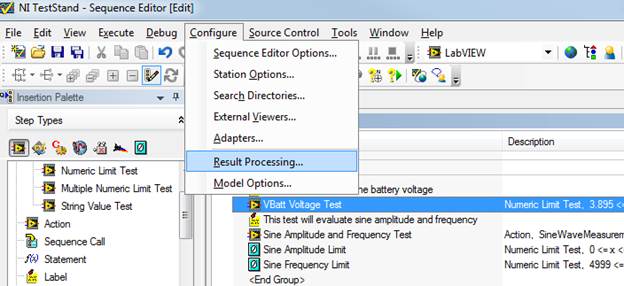

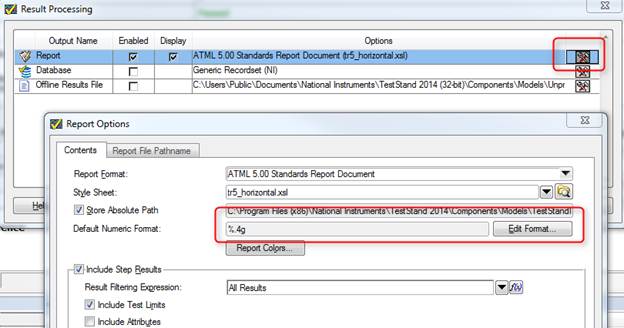

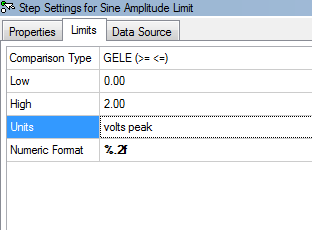

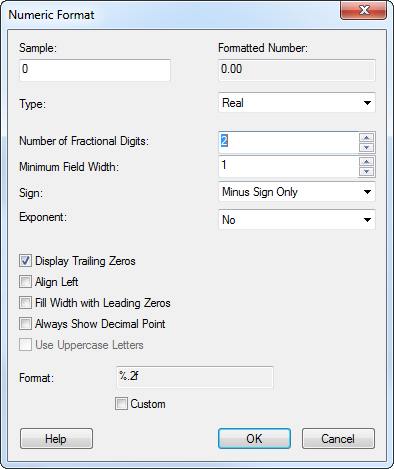

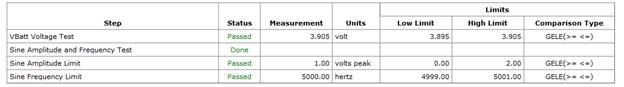

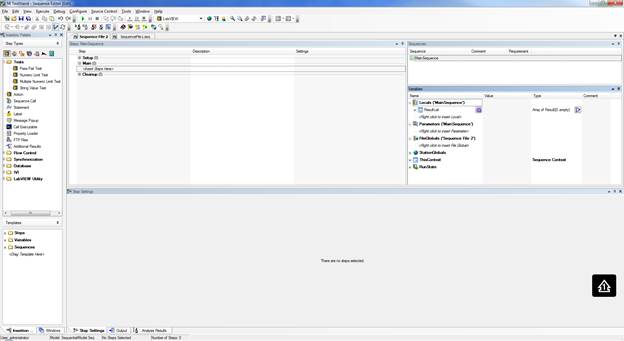

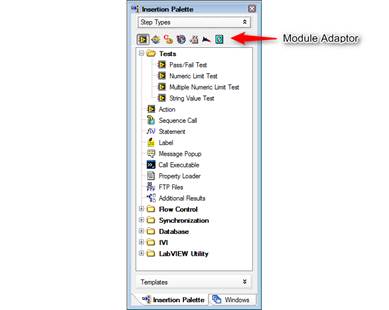

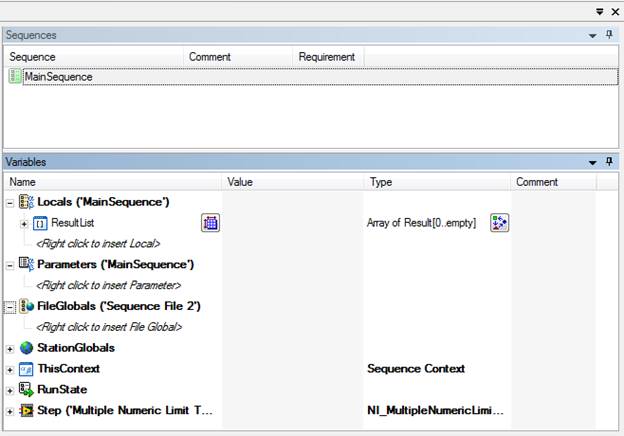

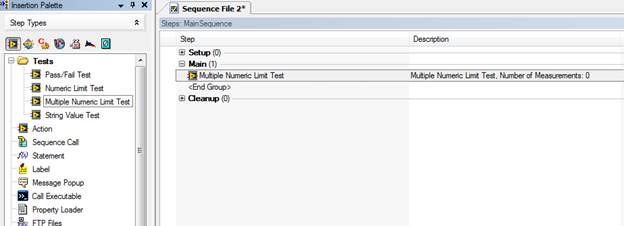

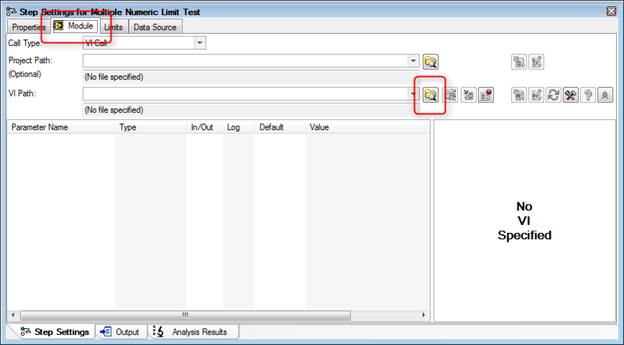

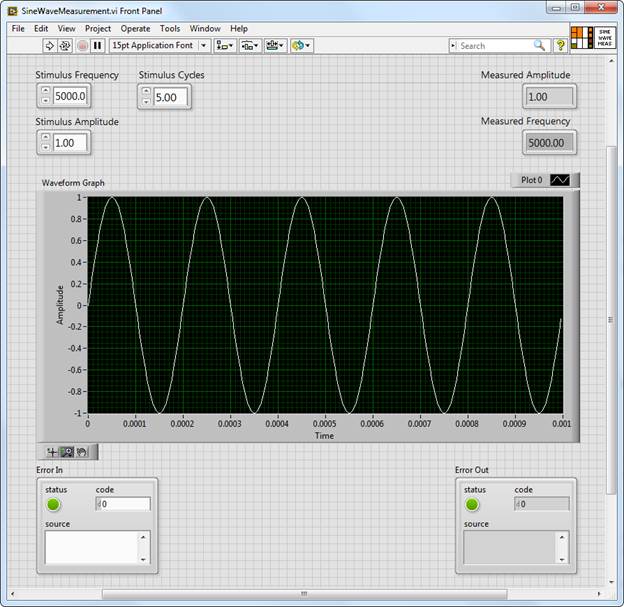

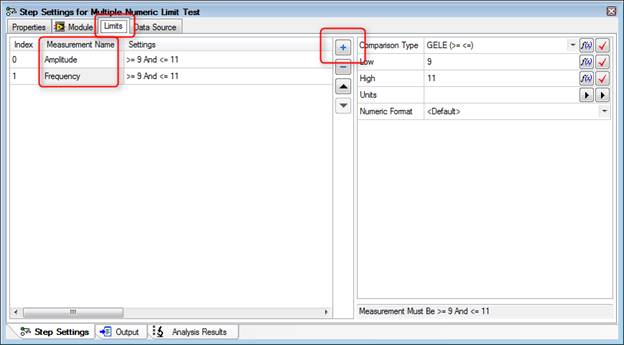

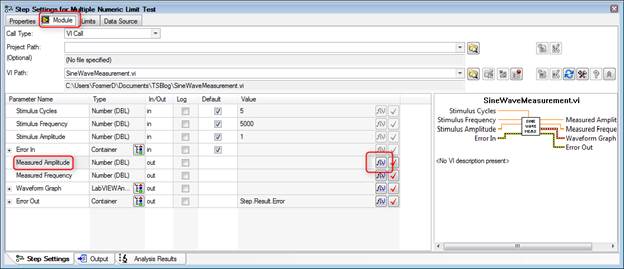

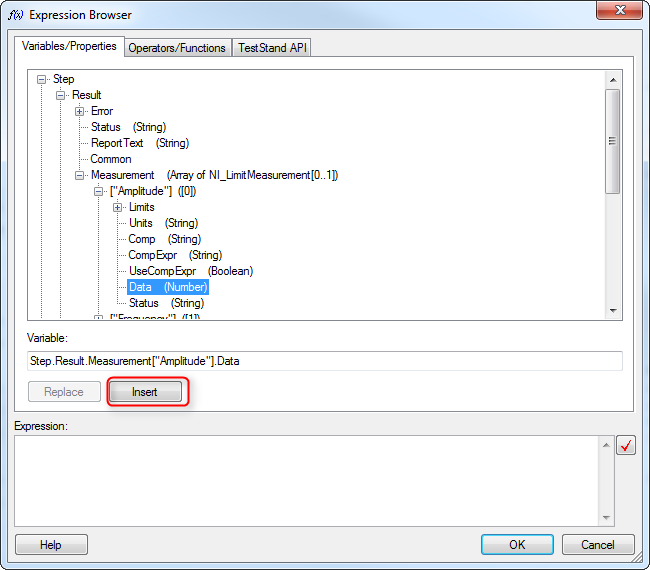

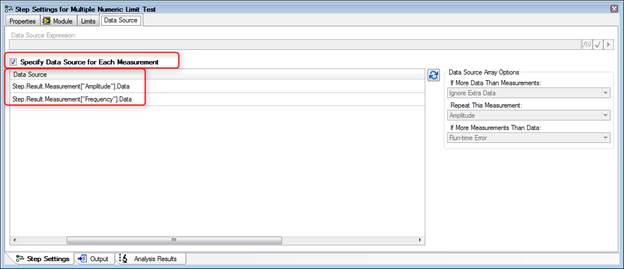

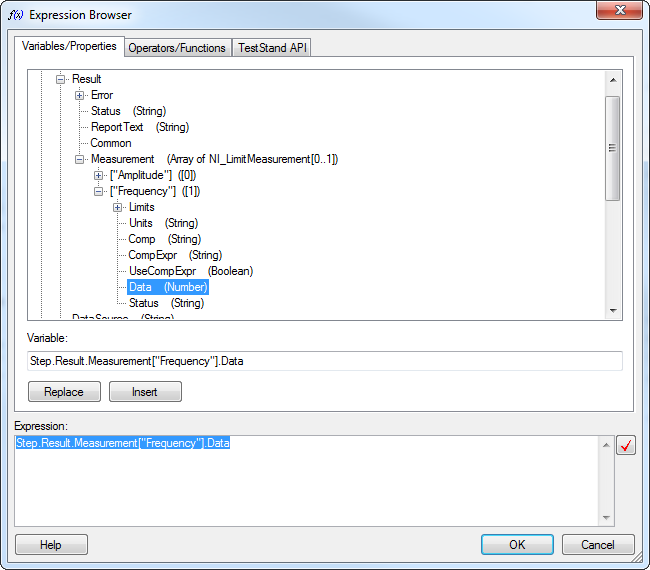

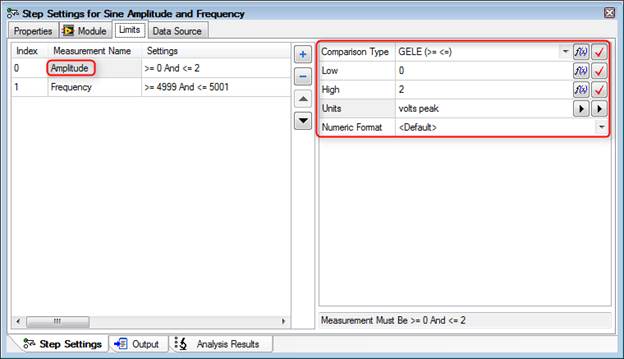

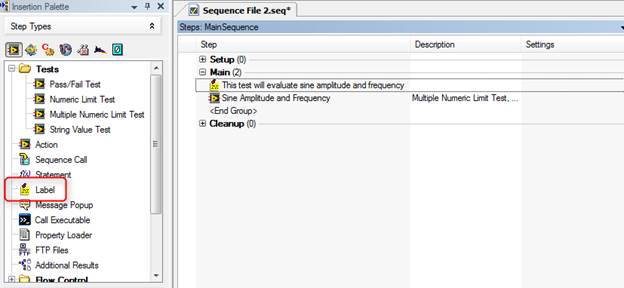

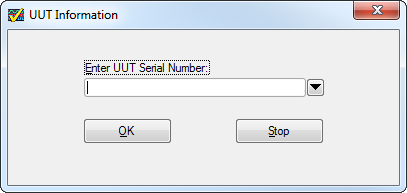

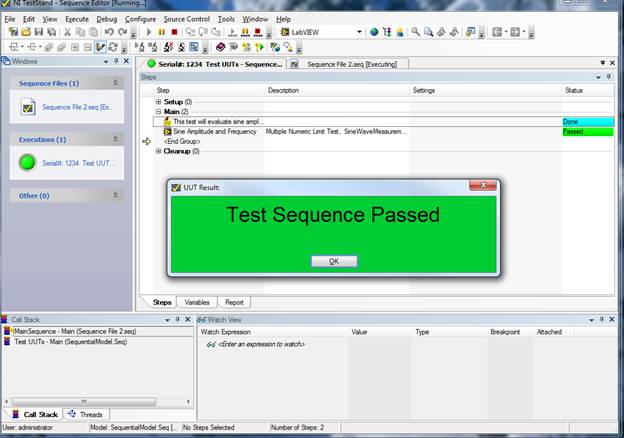

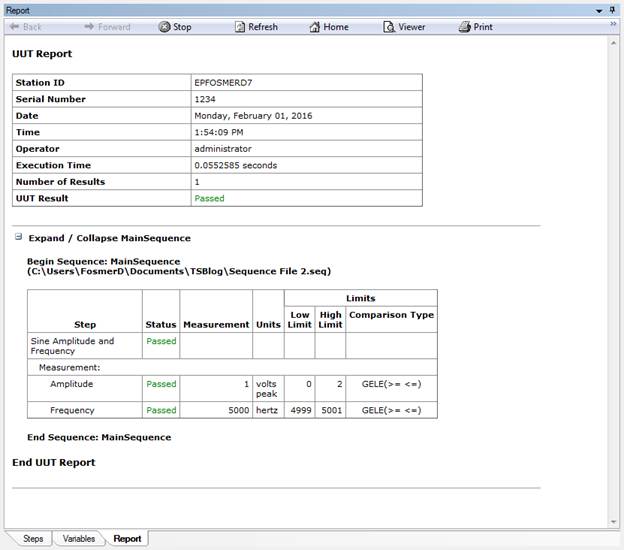

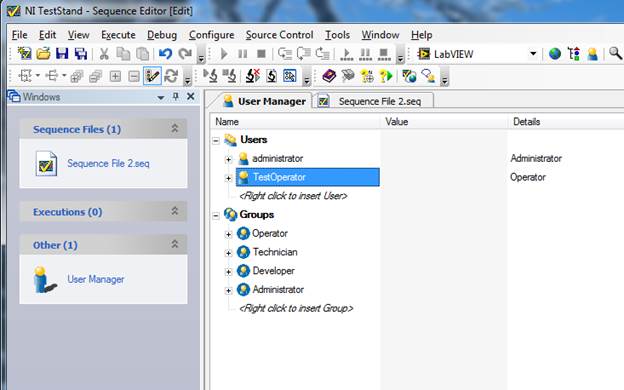

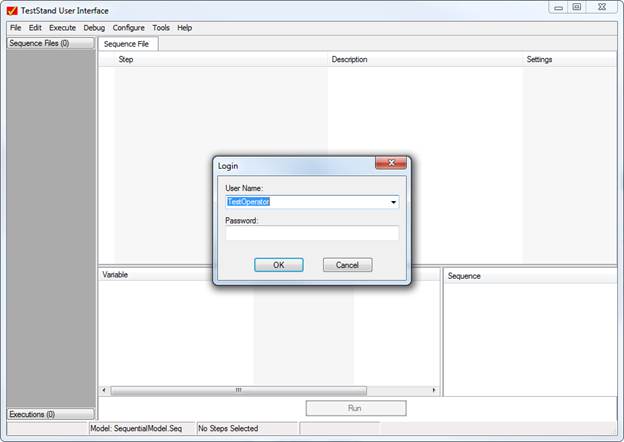

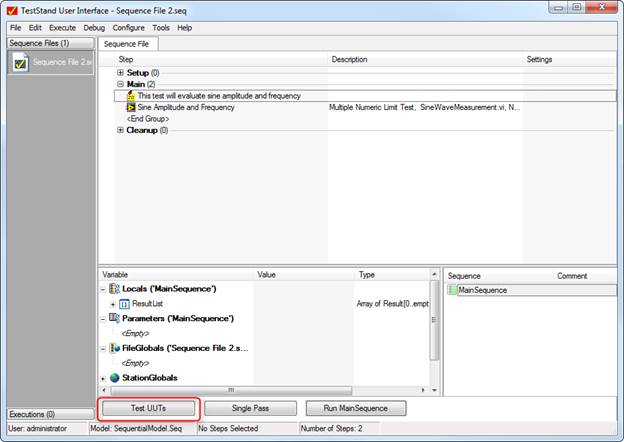

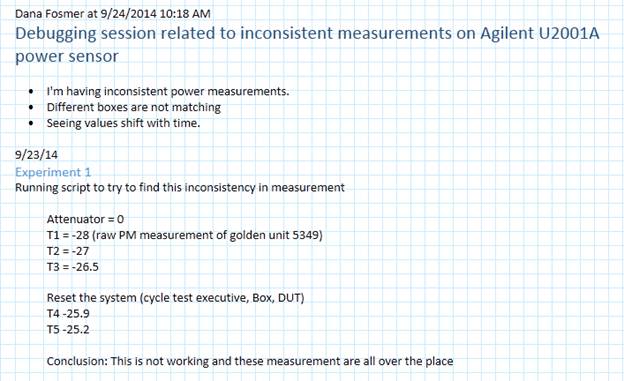

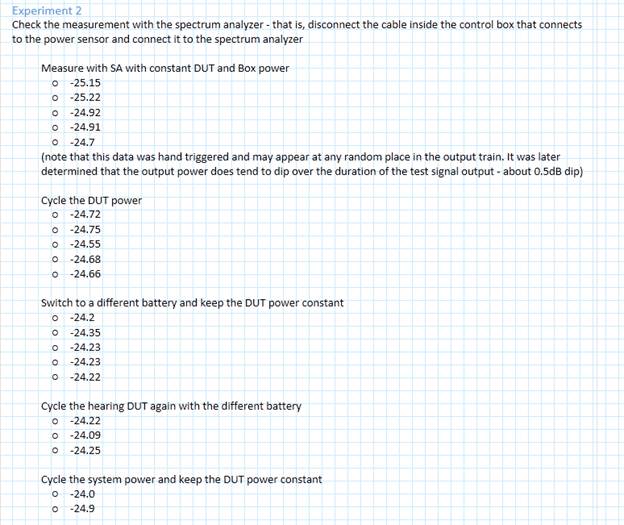

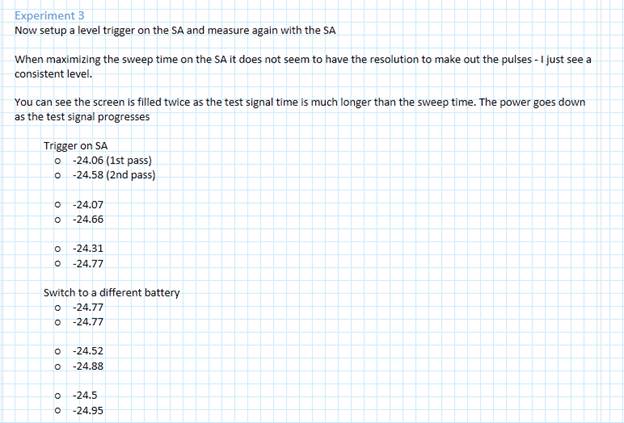

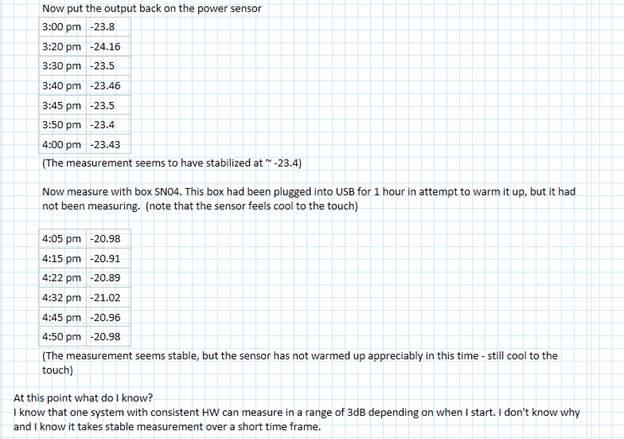

Now if we run the sequence you can see in Figure 14 that it still passes.

Improving the format of the test report

One thing I noticed in the report is that all those digits in the VBatt Voltage Test and the Sine Amplitude and Frequency results are not in the correct format. If I go to Configure -> Results Processing, I can change the way numbers are shown on the report (Figure 15). In the results processing dialog, if I click on Options, I can edit the number of digits on the report, as shown in Figure 16. The default number of digits is 13.

I also noticed that the limits on amplitude and frequency should have some digits. Go into the limit step and add a custom numeric format. I also added a unit of measure, which makes it look a little better; see Figure 17.

We also need to update the local variables to keep them from cutting off any digits. Right click on the amplitude and frequency variables and change them to Real with two digits of precision. Figure 18 shows the Numeric Format dialog box.

I also went back and added a unit for the VBatt test. Now in Figure 19 our report is looking a little bit better.

Summary

In this post I showed some different methods to control the flow of the test with preconditions and post actions. I also showed how to use local variables, rather than the automatic results built into TestStand, to apply limits to tests. Finally, I showed a couple of little things to format the test report.

Source Code

Find the code for this post here

Introduction

Over the last year or so I’ve been working with NI TestStand quite a bit. While I’ve worked with LabVIEW for several years, I have not had much exposure to TestStand. I’m hoping to write a blog series on TestStand to aid in my learning.

When I started using TestStand my first question was, “how do I use this to make the creation of a test system faster?” I wanted to use LabVIEW and get a test system up and running quickly, but I didn’t have any test executive infrastructure in place.
That is, I just wanted the operator interface, test sequencer, and report generation done so I could focus on tests. Well, that’s what TestStand is supposed to do, and I was going to figure out how to make it do it. Unfortunately, I didn’t know much about TestStand and I had to figure out how to do all the basic stuff. NI always has lots of training materials available, but I just wanted to know how to get something super simple going, and I couldn’t quite find the help I wanted. If you start with an empty sequence file it looks like Figure 1.

Navigating the TestStand Sequence Editor

On the left is the Insertion Palette. Note that in Figure 2 I’ve pointed out the Module Adaptor selection area. I have mine set to LabVIEW, as I want to call my LabVIEW code on each test step. Other choices are C/C++ DLL or .NET. Simply click on these to change the adaptor. There’s lots of other useful stuff in the Insertion Palette that I’ll talk about later.

Another area of interest is the Steps Pane. That’s right in the middle, and it controls your test steps and test flow. Figure 3 shows the Steps Pane. I think the Steps Pane is technically held in the Sequence File Window. I don’t know, these terms aren’t that important.

Off to the right are the Sequence Pane and the Variables Pane. Figure 4 shows the Variables Pane.

You’ll see that dealing with all these panes and windows can get to be a pain (I’m so funny). There are a lot of ways to move and dock all these windows. You can even pull some of them out of the sequence editor onto the Windows desktop on another monitor. So play around with it.

Using a Test Adaptor

Now let’s say we have some LabVIEW code and we want to run it in TestStand. Based on the code, we will pick one of the standard LabVIEW test adaptors. In this case I’ll choose Multiple Numeric Limit Test, since my VI has multiple outputs that I want to evaluate with a limit and it’s probably the most useful one.
In Figure 5 I dragged the Multiple Numeric Limit Test from the Insertion Palette to the Steps Pane under the Main section. Clicking on the new Multiple Numeric Limit Test, we can see in Figure 6 that the Step Settings pane is now populated with several tabs and settings options. I’m going to click on the Module tab and browse for the VI I want to run.

The VI I am loading is just a simple sinewave generator I wrote up quickly in LabVIEW. Its outputs are the sinewave amplitude and frequency. I’m going to pretend that this is my test data. See Figure 7.

Now that the VI is loaded into the test sequence, let’s set up TestStand to run the VI and apply limits to the measurement results. There are multiple ways to do this; the first way I know is to use the built-in results collection of TestStand. Start on the Limits tab shown in Figure 8, and use the plus sign to add two measurements. Change their names to “Amplitude” and “Frequency” or whatever makes sense.

Next go to the Module tab shown in Figure 9 and click on the Expression Browser button in the Measured Amplitude parameter row. Once you click the button you’ll see the Expression Browser dialog box shown in Figure 10. What we are trying to do is map the output of our VI to the result collection measurement name we created when we added the amplitude and frequency limits. Browse into Step -> Result -> Measurement -> [“Amplitude”] ([0]) -> Data and then click on Insert and OK.

Go to the Data Source tab as shown in Figure 11 and click the check box called Specify Data Source for Each Measurement. Under Data Source there are two lines, one for the amplitude measurement and one for the frequency measurement. Here we are connecting our data from the TestStand results collection to the limits to be evaluated. Figure 12 shows how to use the expression browser to create the statements shown in Figure 11.

Now go back to the Limits tab shown in Figure 13 and set the limit span.
In my VI, the default amplitude is 1 and the frequency is 5000. As you can see there are several options for one- or two-sided limits, inclusive and so on. I chose GELE (greater than or equal to the low limit and less than or equal to the high limit) and selected the units. Returning to the Steps Pane, name the step something like “Sine Amplitude and Frequency.” Also, drag a label from the Insertion Palette as a way of documenting this step. The Label step type is shown in Figure 14.

Execute the Sequence

We are actually pretty close to a complete test system at this point. It might not test much, but we can now enter a DUT serial number, execute the sequence and get a report. In the Sequence Editor, click Execute -> Test UUTs. The UUT Information dialog box in Figure 15 will appear so you can enter a serial number for the part. Also, if you have a USB bar-code scanner attached to the system, it will automatically accept the input into this dialog box field. I didn’t know this, but a USB bar-code scanner will read text into most software; it’s just like a keyboard or mouse. You can even scan right into Excel.

Type something in there like “1234” and click OK. TestStand will execute the sequence and show that the test sequence passed. The little dialog box that pops up and says “Test Sequence Passed” is shown in Figure 16. This dialog is controlled and created by the process model (so is the UUT Information dialog, for that matter). Click OK on the UUT Result dialog and click Stop on the UUT Information dialog box. The test report will pop up and you can see all the information and measurements that are collected. It’s a pretty good report right out of the box. Figure 17 shows an example of the report. Each time the test is executed the report will be saved in XML format to the directory in which your sequence file is saved.

One last step to creating a fully functional test system is adding a user interface. The TestStand sequence editor allows you to run the sequence and your test, but it’s not set up to do real testing by a test operator.
TestStand comes with premade test system user interfaces to solve just this problem. Before we look at the user interface we have to create a user with limited permissions to be our operator. In the sequence editor click View -> User Manager. Under the Users section right-click and add a user called TestOperator. Right-click this new user and select Properties, and click Operator. See Figure 18. Save the new user setting and close the User Manager. Note that the User Manager changes are a station setting and live with the installation of TestStand on the computer you are using, and are not saved into the sequence file.

Go to the path C:\Users\Public\Documents\National Instruments\TestStand 2014 (32-bit)\UserInterfaces and you will find two folders, Simple and Full Featured. In these folders you’ll find the premade user interfaces in a variety of languages. If you go into Full Featured and then LabVIEW, you’ll find a file called “TestStand 2014 (32-bit) LabVIEW UI – Operator”; this is the LabVIEW implementation of the user interface. From this interface you can then load your test sequence file and run it in a production environment. As shown in Figure 19, open the file and log in as the new user TestOperator, then open the sequence file that we built and you are ready to test by clicking the Test UUTs button shown in Figure 20.

Summary

TestStand is a great tool for building a simple test system quickly, as it handles a lot of the infrastructure for you, like the user interface and reporting. This was the super basic stuff to load a LabVIEW VI and run it in the TestStand sequencer. TestStand can sort-of be as sophisticated and complex as you want it to be as you become more of a power user. I’m still a beginner, but I will keep writing as I learn and hopefully it will be a benefit to some others and I’ll remember how to do stuff the next time.

I have previously written about debugging, but that was more of a high-level abstract view.
I think this is a really important topic for test engineers and engineers in general. I am often disappointed in my own debugging skills, but I have also met very few people who are very good at it. A tool I’ve been trying to use a lot for debugging lately is the Microsoft program OneNote. OneNote is great and I try to use it to write everything down. This post is a recent example of how I use OneNote to aid in my debugging.

To set things up, I was having a problem with a test system I’ve been developing where measurements (in this case an RF power measurement taken with a USB power sensor) seemed to be working well in the short term and drifting in the long term. The first thing I did was to take a few repeated measurements and try to get a feel for this problem. It seems to me that the first part of debugging a problem is just sort-of playing around for a while to start to get a sense of it. The trouble with this is it’s easy to get stuck in this mode. This is where forcing myself to write things down in OneNote helps. It speeds up the transition from just playing around to drawing conclusions and logically narrowing the problem down to the root cause.

Looking at Figure 1, you can see that the first thing I always do before I write something in OneNote is to right-click and select the last option in the menu, which is to insert your name, date and time. Next, I put some kind of title using the Heading 1 style and write a few bullet points about what I’m doing. Using the Heading 2 style, I break the notes up further into separate little experiments. I don’t really have anything that defines an experiment; it just sort-of breaks up the notes into how the thought process goes. I then start taking some measurements and recording the results. I’m not that careful about recording all details of the experiment, like the equipment I used, and while that would be a good addition, I just use the notes to aid in my thinking through the problem.
As you can see, the measurements start at -28 dBm and rise to -25.2 dBm. I would expect them to stay within about 0.1 dB. A couple more notes: when I refer to “box” that is basically the test system, and “golden unit 5349” is a known good and characterized DUT that I can trust. At the end you can see I wrote a little conclusion for myself that basically just says: this isn’t doing what I would expect, so I have to keep debugging. What’s important about writing a conclusion here is that it forces me to stop and think of how to isolate what is going on. I could easily continue making this measurement all day and not learn anything more. This is what I was talking about earlier, getting a feel for the problem. You have to start somewhere, but also focus on how to quickly move past this stage.

Continuing on, Figure 2 shows the different configurations I went through to try to recreate the variation. You can see I tried cycling the DUT power (the DUT is battery powered and the battery voltage can have an effect on the output power) and different batteries. If you look carefully at some of the text you’ll notice it was not written at the same time the measurements were taken. I went back at a later time to add this text as I learned more about how to properly set up and measure with the spectrum analyzer. As you can see, the measurements with the spectrum analyzer were pretty consistent. In Figure 3 I’m still making the same measurement with the spectrum analyzer, but now I’ve set up a trigger so those measurements should be more repeatable and comparable.

Figure 4 shows more of the section labeled “Experiment 3.” I’ve now switched back to the power sensor and am spacing out the measurements over a longer time and recording when they were taken. At this point, I was thinking that the length of time the system sits idle had some effect on the measurement, and usually if a measurement changes over time it’s due to some sort of environmental factor.
I just know this from experience, and that’s why I’ve also started noting the general temperature of the power sensor. I know these power sensors can get quite warm. Towards the end, I added measurements from a second test system to look for variation due to cold versus warmed-up power sensors.

Figure 5 is the last of the measurements. You can see that I continue to track the time of each measurement, and there are two big surprises related to the measurement. The first surprise is the first line: the measurement is now 2.57 dB lower on the same setup than it was just an hour ago. The second big surprise is that the power drops a ridiculous 17.24 dB after the system is left powered overnight.
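
The kind of bookkeeping described above (timestamped readings compared against an expected spread of about 0.1 dB) can also be sketched in a few lines of Python. This is only an illustration, not the method used in the notes: the function name `flag_drift`, the timestamps, and the absolute readings are all made up, chosen so the deltas match the 2.57 dB and 17.24 dB drops mentioned in the text.

```python
from datetime import datetime


def flag_drift(readings, tolerance_db=0.1):
    """Return the readings that differ from the first one by more than tolerance_db.

    readings is a list of (timestamp, power_dbm) tuples. The first reading is
    treated as the reference, which is an assumption of this sketch.
    """
    reference_dbm = readings[0][1]
    flagged = []
    for timestamp, power_dbm in readings:
        delta_db = power_dbm - reference_dbm  # a difference of two dBm values is in dB
        if abs(delta_db) > tolerance_db:
            flagged.append((timestamp, power_dbm, round(delta_db, 2)))
    return flagged


# Hypothetical readings; only the size of the drops comes from the text.
readings = [
    (datetime(2022, 1, 1, 9, 0), -25.20),
    (datetime(2022, 1, 1, 9, 5), -25.25),
    (datetime(2022, 1, 1, 10, 5), -27.77),   # about an hour later
    (datetime(2022, 1, 2, 8, 0), -42.44),    # after sitting powered overnight
]

for timestamp, power_dbm, delta_db in flag_drift(readings):
    print(f"{timestamp:%Y-%m-%d %H:%M}  {power_dbm:.2f} dBm  ({delta_db:+.2f} dB from first reading)")
```

Writing the delta next to each reading makes it harder to keep "playing around" without noticing that the numbers have walked away from where they started.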

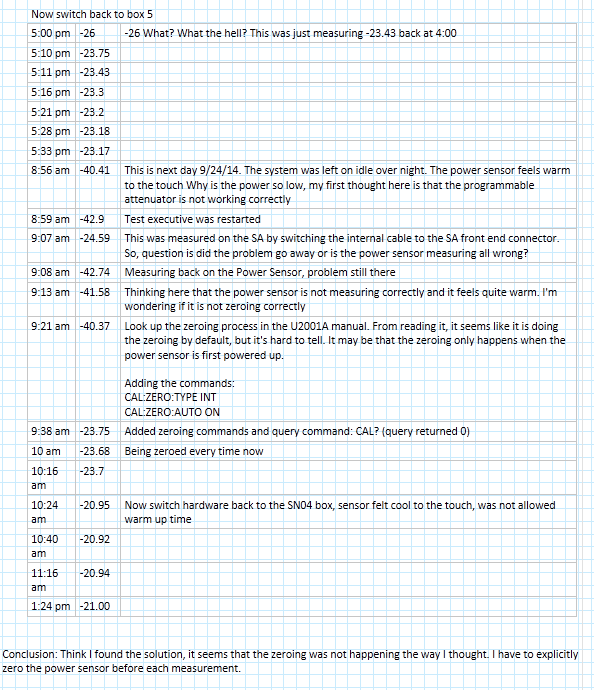

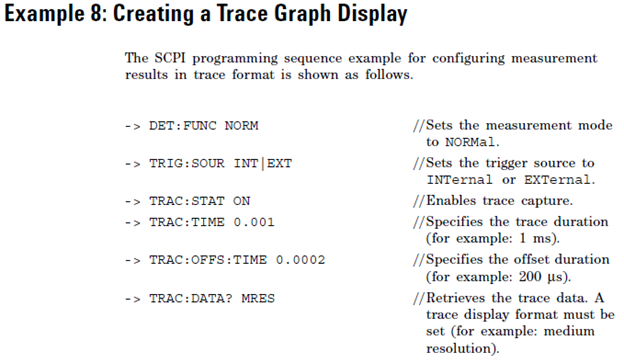

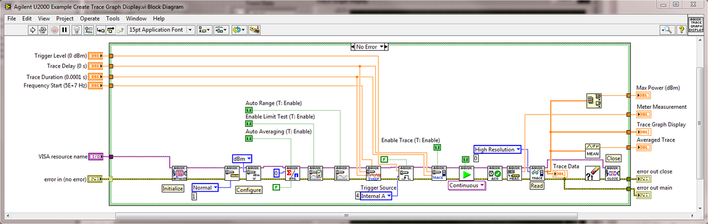

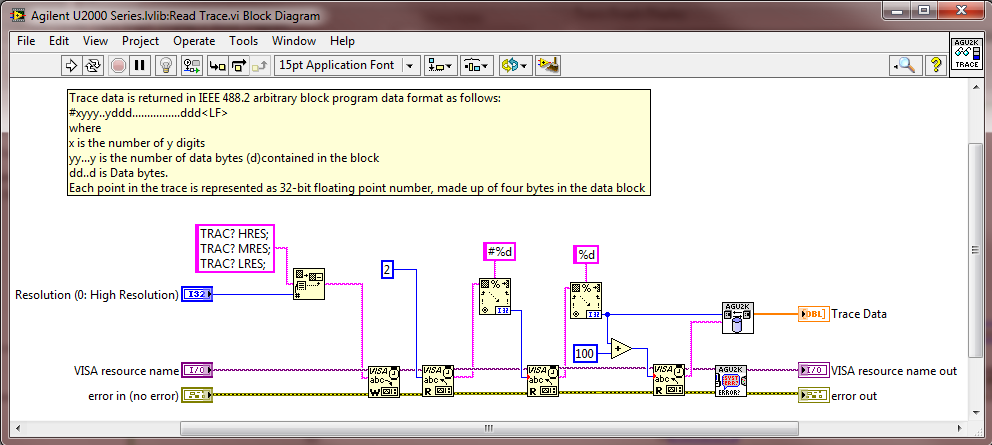

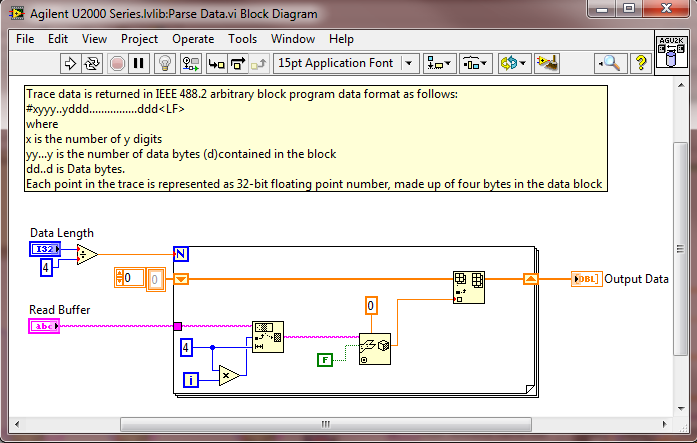

The big breakthrough was when the power sensor was consistently measuring down at -40 dBm and the spectrum analyzer was still measuring up at -24 dBm. This told me that the power sensor was the problem and it was what was causing the measurement variation. This made me feel pretty stupid, as I had been working with this power sensor for a while and its measurements were apparently useless (but I was making progress). At this point I had identified a problem and needed to find a way to fix it.

I did know that power sensors need to be zeroed before taking measurements, but I thought I had read in the instrument manual that this was done automatically when the sensor was powered up or the temperature changed by 5 degrees. I decided I could verify whether the zeroing had been done by explicitly adding the commands to zero the sensor before each measurement. Well, as you can see in Figure 5, the measurement snapped right back into line with the spectrum analyzer once the zeroing had been done. I then wrote my final conclusion that this was a big source of the variation in question and the power sensor should be zeroed before every measurement. My conclusion was that temperature change was the source of variation and it was not being accounted for.

Summary

In this article I went through my process for debugging a real problem I had and showed how writing aided in that debugging. I’m not so much trying to show what a genius debugger I am, but that my debugging gets way better if I force myself to write things down as I go. I think Microsoft OneNote is a great tool for this purpose.

Recently I’ve been working with the Agilent U2001A power sensor. The U2001A is an averaging power sensor, which simply means that when the instrument is queried, the result is an average of multiple samples. If you want to easily measure peak power, you have to purchase the U2020 series power sensor, which costs more.
However, the U2000A averaging sensor can be configured to create what is called a trace graph display, which effectively queries all of the individual points that go into the averaging the sensor is performing. Software can then be used to find the peak power measurement or simply display the measurement like a digitized waveform.

To determine how to create the trace graph display I used the U2001A programming guide and the U2000 Series LabVIEW driver from NI. The Agilent programming guide provides the example code, shown in Figure 1, to create a trace graph display. This code is basically correct, but I feel like they left out a couple of things. First of all, I found that the trigger requires a little more explanation. The best way I found to do it was to trigger on a power level; their example doesn’t have this code. If you do not specify a trigger level it will just trigger immediately, but this doesn’t give as much control in some situations.

Another thing I struggled with was how to decode the data returned by the instrument. The data is returned from the instrument in something called the IEEE 488.2 arbitrary block program data format. It took me a little while to find it, but the LabVIEW driver includes a VI to decode this data. I’m just pointing this out because the example from the programming guide doesn’t go into these details.

Figures 2 and 3 show the LabVIEW code. The actual code is also available. A couple of things to note in Figure 2: the trigger level was set to -30 dBm. You can see from the graph that the acquisition begins at -30 dBm on the rising edge of a pulse, there is a flat portion, and then the signal falls back into the noise.
Also note the VI outputs: “Averaged Trace” is the average of everything on the graph, “Meter Measurement” is the average returned by the meter (I’m not totally sure why that doesn’t match the average computed in the software; I think the points acquired and the measurement points must not match) and “Max Power (dBm)” is the peak value found in the graph array data.

Figure 3 shows the block diagram of the example LabVIEW code. This code has quite a bit more configuration than the example code from the programming guide in Figure 1. Figure 1 shows the critical commands, and many of the extra commands I have in the LabVIEW code can be stripped out as they are just resetting the default values. I just felt this was more complete.

As I mentioned earlier, one nice thing in this code is that the U2000 driver implements the code to read the trace data. This capability is in the VIs “Read Trace.vi” and “Parse Data.vi”, which are shown in Figures 4 and 5. You can also see that the driver has a comment with some details about the IEEE 488.2 data format. One strange thing I found with this code is that it seemed to work great if I kept the sub-VI “Wait for Acquisition Complete.vi” open, but if it was closed the code seemed to hang a lot. Not sure what that is all about.
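
For readers working outside LabVIEW, here is a rough Python sketch of what decoding an IEEE 488.2 definite-length arbitrary block and finding the peak could look like. It is not a port of the driver's “Parse Data.vi”. The block header layout (`#`, one digit giving the number of length digits, then the byte count) comes from the IEEE 488.2 format, but the payload encoding is an assumption here: the sketch assumes big-endian 32-bit floats in dBm, so check the programming guide for what your sensor actually returns. The function names and the sample data are hypothetical.

```python
import struct


def parse_definite_block(raw: bytes) -> bytes:
    """Strip the IEEE 488.2 definite-length block header and return the payload.

    Layout: b'#' + one digit N + N digits giving the payload length in bytes.
    """
    if raw[0:1] != b"#":
        raise ValueError("block does not start with '#'")
    n_digits = int(raw[1:2])
    if n_digits == 0:
        raise ValueError("indefinite-length blocks are not handled in this sketch")
    length = int(raw[2:2 + n_digits])
    start = 2 + n_digits
    payload = raw[start:start + length]
    if len(payload) != length:
        raise ValueError("block is shorter than its header claims")
    return payload  # anything after the payload (e.g. a newline) is ignored


def decode_trace(payload: bytes) -> list:
    """Decode the payload as big-endian 32-bit floats (an assumption, see above)."""
    count = len(payload) // 4
    return list(struct.unpack(f">{count}f", payload[:count * 4]))


# Build a synthetic block so the example runs without an instrument.
samples_dbm = [-45.0, -30.0, -24.5, -24.0, -31.0]
payload = struct.pack(">5f", *samples_dbm)
raw_block = b"#" + b"2" + str(len(payload)).encode() + payload + b"\n"

trace = decode_trace(parse_definite_block(raw_block))
print("points:", len(trace))
print("peak (dBm):", max(trace))
```

Once the trace is a plain list of numbers, finding the peak is just `max()`, which is the same idea as the “Max Power (dBm)” output of the VI.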

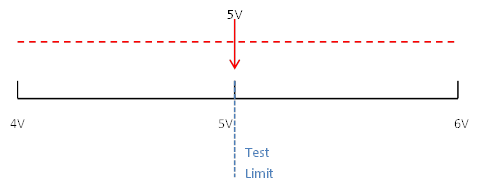

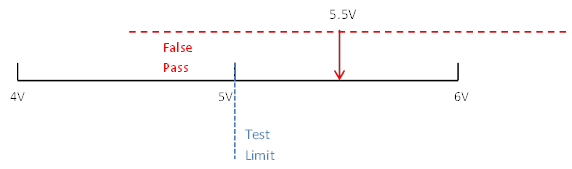

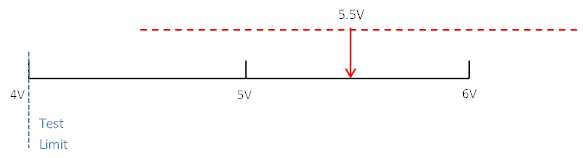

I posted this example code and you can download it on this page; it is written in LabVIEW 2012. Note that I’m not providing the U2000 driver; get that from NI at the link above.

Summary

This post shows how to implement an Agilent U2001A power sensor trace graph display in LabVIEW. I feel this code provides a slightly more complete solution than the example code provided in the Agilent Programming Guide. Another useful feature of this code is that it makes it possible to find the peak power of a measurement using the average power model of power sensor.

Guard-banding is the concept of tightening test limits by the amount of the measurement accuracy of your measurement system in order to prevent bad DUTs from being mistakenly passed. For example, let’s say you are taking a measurement with a specification limit of 5 volts and the measurement system being used to measure a DUT has a measurement accuracy of 1 volt. To make sure that a DUT with a true value greater than 5 volts does not pass, the limit should be guard-banded by the 1 volt measurement accuracy and set at 4 volts.

Here is how this works. You have a DUT with a true value of 5 volts that could be measured anywhere from 4 to 6 volts due to the measurement accuracy of 1 volt. See Figure 1. If the test limit is set at 5 volts according to the specification, then half of the time this DUT will pass and half of the time this DUT will fail. This is an example of a DUT we would want to pass, but that might false fail.

Now let’s say that there was a DUT with a true value of 5.5 volts. It could be measured anywhere from 4.5 to 6.5 volts. We would never want this DUT to pass, but it would pass 25% of the time (assuming every value in that range is equally likely). See Figure 2. To avoid the potential of a false pass, a guard-band can be applied to create a test limit of 4 volts. Now, with the limit at 4 volts, the DUT with a true value of 5.5 volts from Figure 2 can never pass. See Figure 3. It’s pretty easy to see that applying a guard-band will potentially fail more good parts.
This highlights the importance of knowing where your measurement population is compared to your test limit and of having an accurate measurement system. This brings up the concept of Cpk, which is a metric that indicates whether your measurement population is too close to your limit.
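
The guard-banding arithmetic above can be checked with a short Python sketch. It assumes the measurement error is uniformly distributed within plus or minus the accuracy, which is what the 50% and 25% figures imply, and it uses the standard one-sided Cpk formula for an upper limit, (limit - mean) / (3 * sigma). The function names and the sample population are made up for illustration.

```python
import statistics


def pass_probability(true_value, upper_limit, accuracy):
    """Chance that a DUT measures at or below upper_limit.

    Assumes the reading is uniformly distributed over
    [true_value - accuracy, true_value + accuracy].
    """
    low = true_value - accuracy
    high = true_value + accuracy
    if upper_limit <= low:
        return 0.0
    if upper_limit >= high:
        return 1.0
    return (upper_limit - low) / (high - low)


def guard_banded_upper_limit(spec_limit, accuracy):
    """Tighten an upper spec limit by the measurement accuracy."""
    return spec_limit - accuracy


def cpk_upper(measurements, upper_limit):
    """One-sided Cpk against an upper limit: (limit - mean) / (3 * sigma)."""
    mean = statistics.mean(measurements)
    sigma = statistics.stdev(measurements)
    return (upper_limit - mean) / (3 * sigma)


spec_limit = 5.0   # volts, from the example in the text
accuracy = 1.0     # volts, from the example in the text
test_limit = guard_banded_upper_limit(spec_limit, accuracy)

print("guard-banded limit:", test_limit)
print("true 5.0 V, limit 5 V:", pass_probability(5.0, spec_limit, accuracy))
print("true 5.5 V, limit 5 V:", pass_probability(5.5, spec_limit, accuracy))
print("true 5.5 V, limit 4 V:", pass_probability(5.5, test_limit, accuracy))

# Hypothetical population of measurements, in volts.
population = [3.0, 3.1, 2.9, 3.0, 3.2, 2.8]
print("Cpk against the 4 V limit:", round(cpk_upper(population, test_limit), 2))
```

A larger Cpk means the population sits further from the limit relative to its spread, so fewer good parts are lost to the guard-band.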
